Past research suggests that GPT-4 outperforms other models at forecasting stock price movements from news sentiment.

In this article, I investigate its stock-picking ability. The usual disclaimer applies: this article is not investment advice, merely an academic exercise.

The question I am asking is whether GPT-4 can generate reasonable investment portfolios given a description of the current market environment. I call this retrieval-augmented portfolio generation (RAPG).

Today is the end of January, the time when Chief Investment Officers at asset management firms publish their investment outlooks for the year ahead. Their reports are usually well-researched and informative but rarely actionable. It's the responsibility of stock pickers and asset managers to turn them into investment portfolios.

Large language models are good at extracting insights from long texts and synthesizing knowledge by making connections across domains and concepts.

Can we ask GPT-4 to read an investment outlook report from a wealth management firm and generate a reasonable investment portfolio?

To answer this question, I picked four investment outlook reports published last year, in January 2023, by respected wealth management firms. I fed each document to GPT-4 and asked the model to generate an aggressive growth portfolio based on the information in the document.

GPT-4's training data has an April 2023 cutoff, so the model may already know about some early-2023 market developments, which introduces a risk of lookahead bias into its judgement. I could not use older versions of the model because of their smaller context windows.

Here is the PyAQ script that was used to generate the portfolios:

https://github.com/cherevik/rapg/blob/main/generate_portfolios.yml

The script uses chain-of-thought prompting with self-consistency. It instructs the model to think step by step and do the following:

- Identify major trends in the market environment described in the report

- Use these insights to develop several aggressive investment hypotheses

- Translate the hypotheses into an aggressive growth investment portfolio
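The actual prompt lives in the PyAQ YAML script linked above. As a rough illustration only, the three steps could be packed into a prompt like the one below. The template wording, the JSON output format, and the helper names `build_prompt` and `parse_portfolio` are hypothetical assumptions, not the author's code, and no model API call is shown.

```python
import json
import re

# Hypothetical prompt template; the wording is illustrative, not the prompt
# used in the author's PyAQ script.
PROMPT_TEMPLATE = """You are an investment analyst. Read the investment outlook report below.

Think step by step:
1. Identify the major trends in the market environment described in the report.
2. Use these insights to develop several aggressive investment hypotheses.
3. Translate the hypotheses into an aggressive growth investment portfolio.

Finish with the portfolio as a JSON object mapping stock tickers to weights
that sum to 1.0.

Report:
{report}
"""


def build_prompt(report_text: str) -> str:
    """Insert the full text of one outlook report into the prompt template."""
    return PROMPT_TEMPLATE.format(report=report_text.strip())


def parse_portfolio(response: str) -> dict[str, float]:
    """Extract the last flat JSON object in the model's answer as ticker -> weight."""
    objects = re.findall(r"\{[^{}]*\}", response)
    if not objects:
        raise ValueError("no JSON portfolio found in the response")
    return {ticker: float(w) for ticker, w in json.loads(objects[-1]).items()}
```

Asking for a machine-readable portfolio at the end lets the reasoning stay free-form while the final answer can still be parsed and backtested.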

To apply self-consistency, the script generates five such portfolios and averages them. See the `count: 5` setting in the generate activity of the PyAQ script.
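Averaging several sampled portfolios can be sketched as follows. This assumes each sample is a mapping from ticker to weight and that a ticker missing from a sample counts as a 0% weight; the function name and the tickers in the example are hypothetical, and PyAQ may combine the samples differently.

```python
from collections import defaultdict


def average_portfolios(samples: list[dict[str, float]]) -> dict[str, float]:
    """Average ticker weights across sampled portfolios.

    A ticker missing from a sample counts as a 0% weight in that sample.
    The result is renormalized so the weights sum to 1 and is ordered by
    descending weight.
    """
    if not samples:
        raise ValueError("need at least one sampled portfolio")
    totals: dict[str, float] = defaultdict(float)
    for portfolio in samples:
        for ticker, weight in portfolio.items():
            totals[ticker] += weight
    averaged = {t: w / len(samples) for t, w in totals.items()}
    norm = sum(averaged.values())
    return {t: w / norm for t, w in sorted(averaged.items(), key=lambda kv: -kv[1])}


# Two hypothetical samples with made-up tickers.
print(average_portfolios([{"AAA": 0.5, "BBB": 0.5}, {"AAA": 0.4, "CCC": 0.6}]))
```

Stocks that appear in most samples keep most of their weight, while picks that show up in only one sample are diluted, which is the point of sampling several times.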

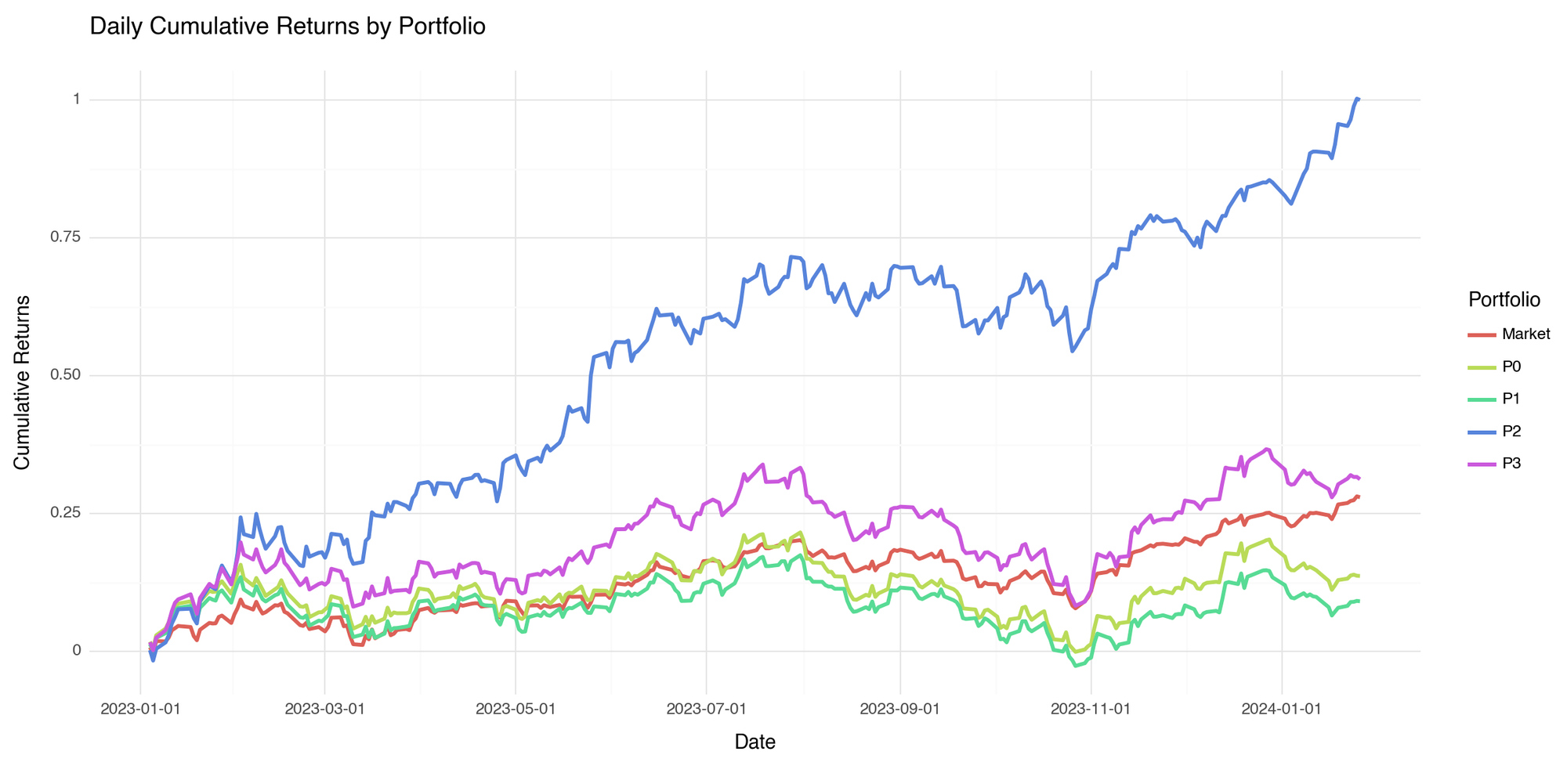

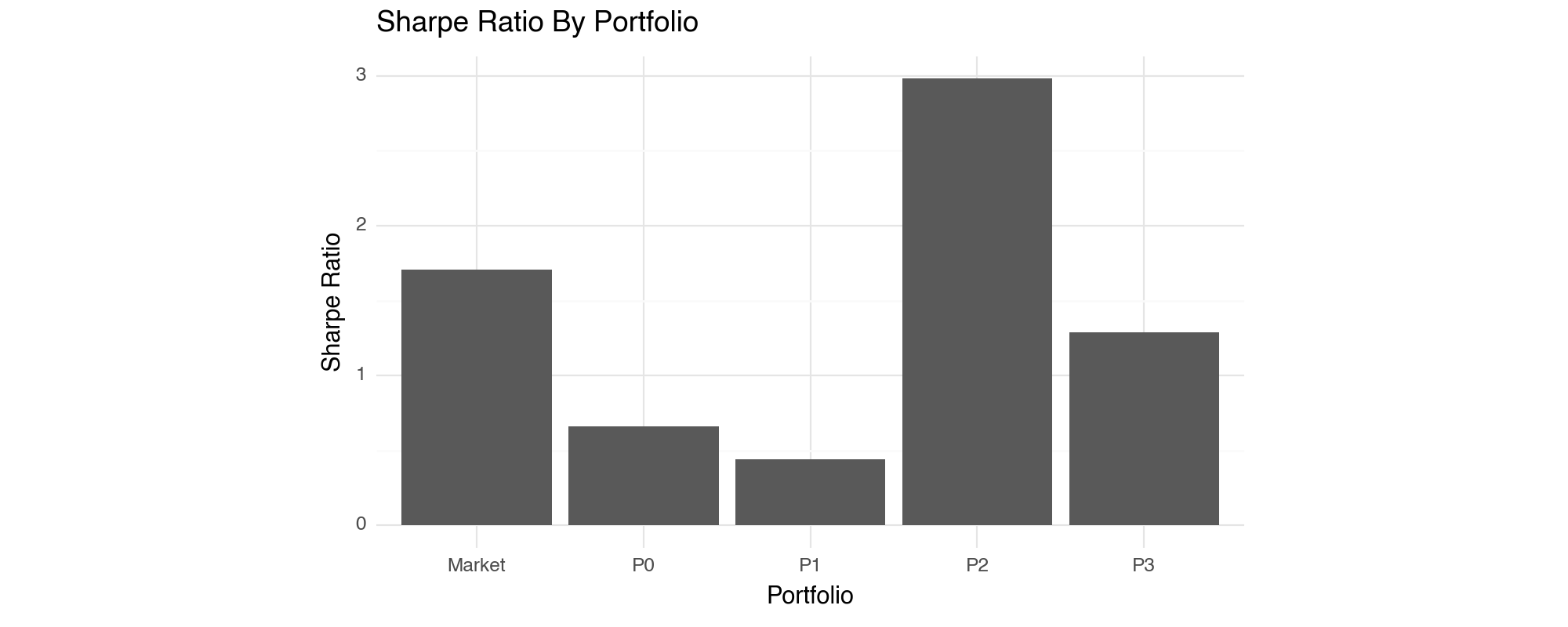

To estimate the quality of the model’s stock picks, I backtested the portfolios on 2023 stock data. The results are in the chart below.

Of the four portfolios, two underperformed the market and two beat it, one of them spectacularly. Even the two underperformers delivered positive returns.

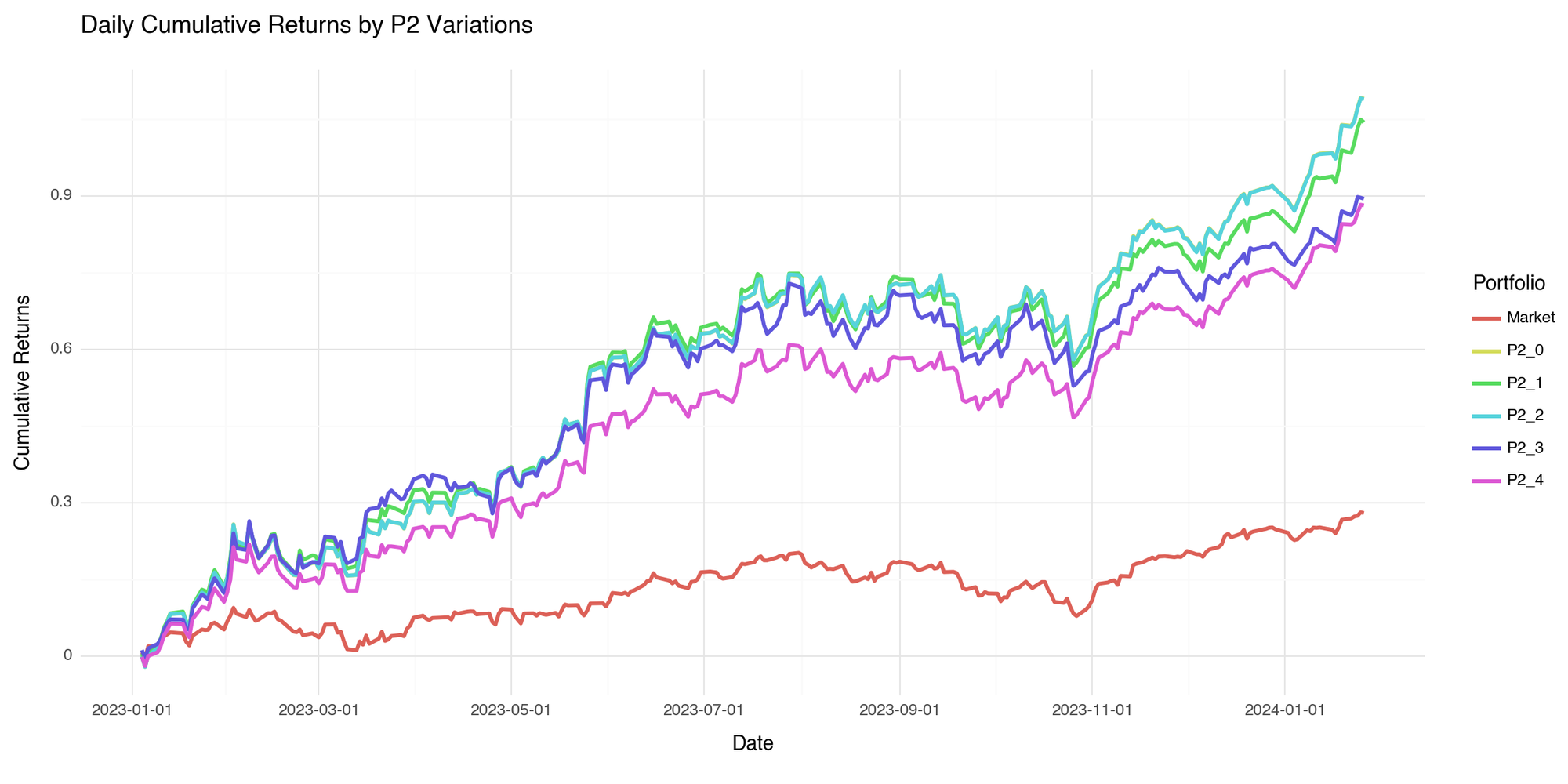
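The analysis notebook is in the repository linked below. A minimal buy-and-hold backtest could look like this sketch, which assumes each portfolio is bought at the start of the year with fixed weights and held without rebalancing, ignoring dividends and trading costs. Under those assumptions the portfolio return is simply the weighted sum of the individual stock returns. The function name, tickers, prices, and benchmark return below are hypothetical.

```python
def buy_and_hold_return(weights: dict[str, float],
                        start_prices: dict[str, float],
                        end_prices: dict[str, float]) -> float:
    """Total return of a buy-and-hold portfolio over the backtest window.

    Each position is bought at its start price with its target weight and
    held without rebalancing, so the portfolio return is the weighted sum
    of the individual stock returns. Dividends and costs are ignored.
    """
    return sum(w * (end_prices[t] / start_prices[t] - 1.0) for t, w in weights.items())


# Made-up prices for illustration only.
weights = {"AAA": 0.6, "BBB": 0.4}
portfolio = buy_and_hold_return(weights, {"AAA": 100.0, "BBB": 50.0}, {"AAA": 130.0, "BBB": 45.0})
benchmark = 0.20  # hypothetical market return over the same window
print(f"portfolio {portfolio:.1%}, excess vs. market {portfolio - benchmark:+.1%}")
```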

Next, I wanted to see how consistent the model was in making its recommendations. In other words, how similar were the results of the five portfolios the model generated based on one investment outlook report?

Here are the results for variations of the second portfolio.

As you can see, all five variations performed fairly consistently.
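One way to put a number on this consistency is sketched below: compare the holdings of every pair of variations and look at the spread of their backtested returns. The overlap measure (sum of the smaller weight per ticker) and the function names are assumptions for illustration, not the metrics used in the author's notebook.

```python
from itertools import combinations
from statistics import mean, stdev


def weight_overlap(a: dict[str, float], b: dict[str, float]) -> float:
    """Share of weight two portfolios hold in common: sum of min weights per ticker.

    1.0 means identical weights; 0.0 means no tickers in common.
    """
    return sum(min(w, b.get(t, 0.0)) for t, w in a.items())


def consistency_report(variants: list[dict[str, float]], returns: list[float]) -> dict[str, float]:
    """Summarize how similar sampled portfolios are in holdings and backtested returns."""
    if len(variants) < 2 or len(variants) != len(returns):
        raise ValueError("need at least two variants, each with one backtested return")
    overlaps = [weight_overlap(a, b) for a, b in combinations(variants, 2)]
    return {
        "mean_pairwise_overlap": mean(overlaps),
        "return_mean": mean(returns),
        "return_stdev": stdev(returns),
        "return_range": max(returns) - min(returns),
    }
```

A high mean overlap together with a small return spread would indicate that the model keeps arriving at essentially the same portfolio from the same report.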

What was in this one report that made this particular portfolio perform so well?

It turns out this was the only one of the four reports that identified artificial intelligence as a major trend likely to impact the market in 2023. As a result, GPT-4 constructed a portfolio that was overweight in NVDA, MSFT, and GOOGL.

You can find the portfolios generated by the model and the Python notebook that I used to analyze them at this link:

https://github.com/cherevik/rapg/tree/main

So, to answer the original question: based on this back-of-the-envelope investigation, retrieval-augmented portfolio generation (RAPG) does hold some promise.

An investment outlook report that provided prescient insight and conviction enabled the model to generate a high-performing portfolio. Reports that lacked such insight but delivered competent analysis produced portfolios that performed roughly in line with the market.

This leads us to the next question.

Can a large language model assist humans in generating prescient insight and conviction?

Stay tuned …