If you were impressed by the first generation of large language models, wait till you interact with a multi-modal one, such as Gemini, GPT4V, or LlaVA.

In addition to text, these models can process and understand images, videos, and speech, which presents new opportunities for workflow automation.

Take, for instance, the vehicle damage assessment report that must be completed by an insurance adjuster at the place of an automobile accident. The report is used to estimate repair costs and determine whether a vehicle is repairable or considered a total loss.

The current process consists of the adjuster taking several pictures at the place of the accident and typing up the report containing the description of the damage, cause of the damage, assessment of repairability, cost estimate, an estimated timeline for completing the repairs, and other information.

With a multi-modal large language model, many of these steps can be automated. To illustrate this point, let’s build a simple app in PyAQ, a low-code platform for cognitive apps by AnyQuest.

As the first step, we must give the app an insurance adjuster persona. We accomplish this by entering the corresponding instructions in the header of the app:

info:

id: aq.apps.accident_report

version: 1.0.0

title: Accident Report Application

profile: >

You are an auto insurance adjuster.

Your job is to assess the vehicle damage at the site of an accident.

Next, we must instruct the app to use a multi-modal model:

models:

gpt4v:

model: gpt-4-vision-preview

provider: openai

At the level of abstraction provided by PyAQ, replacing this model with a different one is a matter of changing a couple of lines.

We strongly advise experimenting with models from different providers. Their relative price/performance varies broadly from use case to use case.

Finally, we need to provide a flow of tasks used to create the report:

- Read an image file

- Prompt the model to generate a damage assessment

- Save the report as an HTML document

In PyAQ, this looks as follows:

activities:

read_image:

type: read

report_damage:

type: generate

inputs:

- activity: read_image

models:

- gpt4v

parameters:

temperature: 0.5

prompt: >

Provide a detailed description of the damage

to the vehicle in the attached image.

write_answer:

type: write

inputs:

- activity: read_image

- activity: report_damage

parameters:

format: html

template: |

## DAMAGE REPORT

{{report_damage}}

In this example, we create a description of the damage based on one image.

The app can be easily extended to consume multiple images and launch several instances of the “generate” activity to fill out the various sections of the damage assessment report. In addition, we could place the insurance company policies and guidelines into the app's memory to guide the generation process.

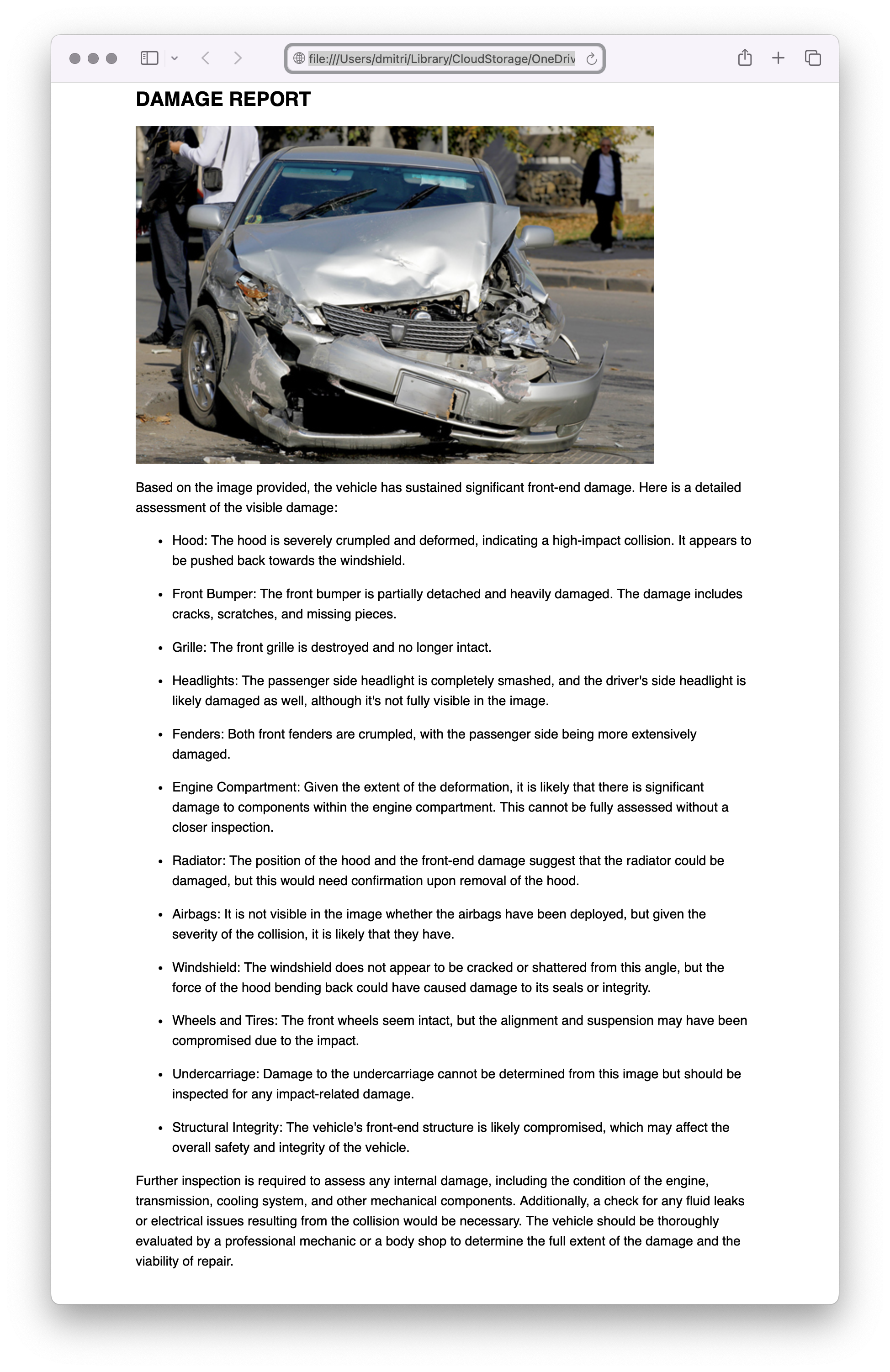

If we run this app on our car accident image, we get a rather detailed description of the damage that would make any insurance adjuster proud.

To summarize, with just a few lines of code in PyAQ, we created an app that generates a detailed damage assessment report by inspecting an image of a car accident. This app can now be deployed on AnyQuest and invoked from an insurance workflow process automated on ServiceNow or any other platform.

You can find the source code for this and many other examples at https://github.com/anyquest/pyaq.git.